An Agentic AI Workflow for Permitting

How Agentic AI could shift the paradigm for permitting

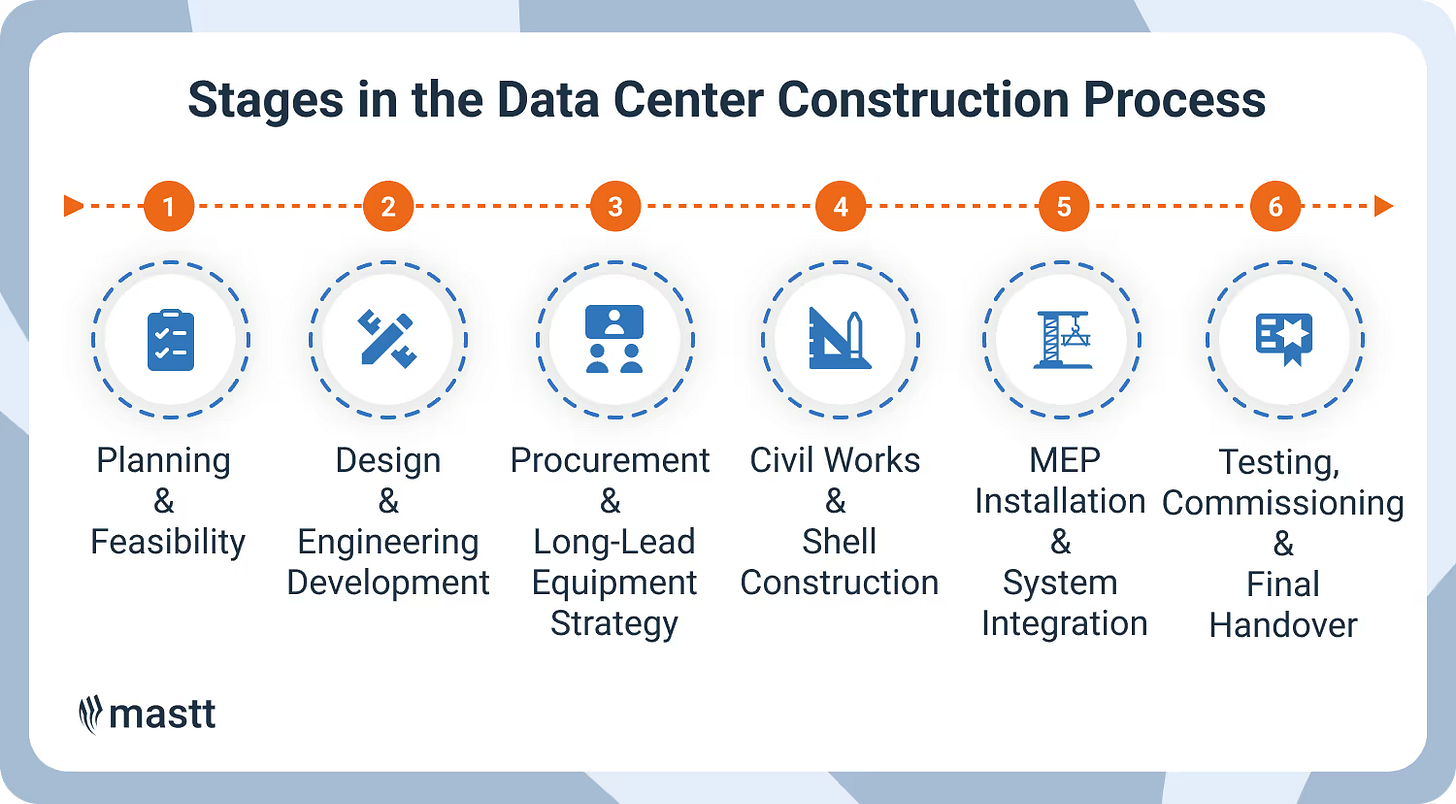

A full data center build — from concept to commissioning — typically takes 18 to 30 months, according to industry analyses from Mastt. That is the construction timeline alone.

Before any of that begins, most projects spend years waiting for various approvals.

A 2024 report by Trellis Network found more than 1,000 clean-energy projects stuck in federal permitting queues, with an average delay of about four years.

Large-scale data centers often face the same web of environmental, zoning, and interconnection reviews. Permitting is a key safeguard for accountability, but today it has also become one of the largest bottlenecks in the digital economy.

The Anatomy of a Delay

A modern data center must secure approvals for land use, environmental impact, water rights, and grid interconnection. If the developer also wants to build its own behind-the-meter generation, it needs permits for a utility-grade power plant as well. Each approval involves different agencies, databases, and timelines.

Clearing local zoning may take months, but a transmission interconnection study can last years while the utility runs load-flow analyses. Federal agencies conduct environmental reviews under NEPA, and a change to the project can send those reviews back for another round. Each revision resets the clock.

While physical construction has become faster thanks to modularization and standardized design, the permitting process remains analog — sequential, paper-bound, and often opaque.

The Software Behind the Curtain

Over the last decade, many platforms have emerged to streamline parts of this process. Most focus on local governments or residential projects. A few, though, target large enterprises and regulators handling complex infrastructure.

Each of these platforms addresses a meaningful pain point. Even so, there is room for a more agentic application that can take the permitting process to the next level.

The Missing Layer

A developer might use Transect to analyze land constraints, OpenGov to file applications, Enablon for environmental compliance, and Conductor AI for documentation control.

Each platform adds efficiency, yet none connect to a shared system that spans jurisdictions and agencies.

That’s where an Agentic AI layer could fit — a permitting agent that can reason across data, law, and geography.

Imagine a network of AI agents that can:

Read and interpret FERC, EPA, and DOE regulations.

Cross-check proposed sites against floodplains, wetlands, and protected zones using live GIS data.

Generate draft filings with pre-filled citations and supporting evidence.

Coordinate directly with regulator APIs to flag conflicts before submission.

Model interconnection queues and suggest alternative sequencing to shorten timelines.

Model permitting timelines for behind-the-meter power plants to shorten time to power.
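The "alternative sequencing" idea can be made concrete with a critical-path calculation. The sketch below compares a fully sequential schedule, where every review waits for the one before it, with a schedule where independent reviews run in parallel and only true prerequisites are respected. The step names, durations, and dependencies are all hypothetical placeholders, not industry data. The model also assumes the dependency graph has no cycles.

```python
# Hypothetical approval steps and durations in months (illustrative only).
DURATIONS = {
    "zoning": 6,
    "environmental_review": 12,
    "water_rights": 8,
    "interconnection_study": 24,
    "btm_plant_permit": 18,
}

# Assumed prerequisites: a step cannot start until these finish.
DEPENDENCIES = {
    "environmental_review": ["zoning"],
    "btm_plant_permit": ["environmental_review"],
}


def sequential_months(durations: dict) -> int:
    """Every review waits for the previous one, so the total is a plain sum."""
    return sum(durations.values())


def critical_path_months(durations: dict, deps: dict) -> int:
    """Longest path through the dependency graph (assumes it is acyclic)."""
    finish = {}

    def earliest_finish(step: str) -> int:
        if step not in finish:
            # A step starts once its slowest prerequisite is done.
            start = max((earliest_finish(p) for p in deps.get(step, [])), default=0)
            finish[step] = start + durations[step]
        return finish[step]

    return max(earliest_finish(step) for step in durations)


if __name__ == "__main__":
    print("sequential:", sequential_months(DURATIONS))
    print("parallel where allowed:", critical_path_months(DURATIONS, DEPENDENCIES))
```

With these placeholder numbers, the sequential path takes 68 months and the parallel schedule takes 36. The binding constraint is the zoning, environmental review, and behind-the-meter permit chain, not the longer interconnection study. An agent would therefore focus its effort on that chain.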

Each agent specializes in one domain — environmental modeling, legal interpretation, or interconnection forecasting — and shares context through an orchestration layer.
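Here is a minimal sketch of that orchestration pattern, assuming each agent is a function that reads a shared context and returns its findings. The orchestrator runs the agents in a fixed order, so later agents can build on earlier findings. All of the agent names, rules, and the months-per-queue-position constant are hypothetical. The toy rules stand in for the GIS, legal, and forecasting models a real system would call.

```python
from dataclasses import dataclass
from typing import Callable

MONTHS_PER_QUEUE_POSITION = 0.5  # hypothetical constant for the toy forecast


@dataclass
class Agent:
    """A specialist agent: reads the shared context, returns its findings."""
    name: str
    run: Callable[[dict], dict]


def environmental_agent(ctx: dict) -> dict:
    # Toy rules standing in for a GIS-backed environmental model.
    flags = []
    if ctx["site"].get("in_floodplain"):
        flags.append("Site intersects a mapped floodplain")
    if ctx["site"].get("in_wetland"):
        flags.append("Site intersects a mapped wetland")
    return {"flags": flags}


def legal_agent(ctx: dict) -> dict:
    # Reads the environmental agent's output from the shared context.
    env_flags = ctx["findings"].get("environmental", {}).get("flags", [])
    flags = ["Environmental findings may require additional review"] if env_flags else []
    return {"flags": flags}


def interconnection_agent(ctx: dict) -> dict:
    # Toy forecast: wait time grows linearly with queue position.
    months = ctx["site"].get("queue_position", 0) * MONTHS_PER_QUEUE_POSITION
    return {"flags": [], "estimated_wait_months": months}


class Orchestrator:
    def __init__(self, agents: list[Agent]):
        self.agents = agents

    def run(self, site: dict) -> dict:
        ctx = {"site": site, "findings": {}}
        for agent in self.agents:  # fixed order: later agents see earlier findings
            ctx["findings"][agent.name] = agent.run(ctx)
        return ctx

    @staticmethod
    def all_flags(ctx: dict) -> list[str]:
        """Collect every agent's flags for a human reviewer."""
        return [f for findings in ctx["findings"].values() for f in findings.get("flags", [])]


if __name__ == "__main__":
    orchestrator = Orchestrator([
        Agent("environmental", environmental_agent),
        Agent("legal", legal_agent),
        Agent("interconnection", interconnection_agent),
    ])
    result = orchestrator.run({"in_floodplain": True, "queue_position": 40})
    print(Orchestrator.all_flags(result))
    print(result["findings"]["interconnection"]["estimated_wait_months"])
```

A shared context keeps the agents decoupled, because each one only needs to know the keys it reads. The trade-off is that the fixed run order encodes the dependencies between agents.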

The goal is not to replace human reviewers but to make their oversight efficient, transparent, and data-driven.

Why Data Centers Feel It First

Today, no industry feels the permitting bottleneck more acutely than data centers. Each hyperscaler site touches multiple layers of review: zoning, utilities, environmental boards, and safety agencies. If one stalls, the entire project drags. When a substation permit slips six months, capacity slips with it. The stakes are higher now: each delay constrains AI growth, inflates energy demand forecasts, and slows grid modernization.

At the same time, regulators face public pressure for transparency. Communities want clarity on water and emissions. Developers want predictable pathways.

An agentic AI permitting workflow for data centers could serve all sides. It would give regulators explainability, developers foresight, and citizens confidence that the process is fair and evidence-based.

Building the Prototype

This doesn’t need to start at global scale. One region, one data source, one workflow is enough.

Take Virginia Energy’s open datasets. They include parcels, transmission lines, and environmental overlays — all prerequisites for feasibility analysis.

A prototype could:

Pull that data dynamically through a Model Context Protocol (MCP) layer.

Apply AI models trained on zoning and environmental codes.

Generate risk-weighted site reports and draft permits.

Send structured documentation for review by human experts.

Log every decision in a transparent ledger using Open Permit Data Standards.
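The prototype steps can be sketched end to end as follows. Several parts are assumptions:

- The MCP data pull is stubbed with fixed sample data.
- The "trained models" are replaced by a simple weighted risk score.
- The "transparent ledger" is implemented as a hash chain, a swapped-in technique where each entry commits to the one before it so later edits are detectable.

The parcel ID, field names, weights, and priority threshold are hypothetical. They do not reflect Virginia Energy's actual schema or the Open Permit Data Standards.

```python
import hashlib
import json

# Hypothetical risk weights per site attribute; a real system would calibrate these.
RISK_WEIGHTS = {"floodplain": 0.4, "wetlands": 0.3, "distance_to_substation_km": 0.02}
REVIEW_PRIORITY_THRESHOLD = 0.3  # assumed cutoff for "high" priority review
GENESIS_HASH = "0" * 64


def fetch_parcel(parcel_id: str) -> dict:
    """Stand-in for a data pull through an MCP server; returns fixed sample data."""
    return {"parcel_id": parcel_id, "floodplain": 1, "wetlands": 0,
            "distance_to_substation_km": 5}


def risk_score(parcel: dict) -> float:
    """Weighted sum of site constraints; higher means riskier."""
    return round(sum(w * parcel[k] for k, w in RISK_WEIGHTS.items()), 3)


class Ledger:
    """Append-only log; each entry stores the hash of the one before it."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record: dict, prev_hash: str) -> str:
        body = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        self.entries.append({"record": record, "prev_hash": prev_hash,
                             "hash": self._digest(record, prev_hash)})

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


def process_site(parcel_id: str, ledger: Ledger) -> dict:
    parcel = fetch_parcel(parcel_id)
    ledger.append({"step": "fetch", "parcel_id": parcel_id})

    score = risk_score(parcel)
    ledger.append({"step": "score", "parcel_id": parcel_id, "risk": score})

    # Every report goes to a human expert; risk only sets the review priority.
    priority = "high" if score >= REVIEW_PRIORITY_THRESHOLD else "normal"
    report = {"parcel_id": parcel_id, "risk": score, "priority": priority,
              "status": "awaiting_expert_review"}
    ledger.append({"step": "route_to_review", "parcel_id": parcel_id, "priority": priority})
    return report


if __name__ == "__main__":
    ledger = Ledger()
    print(process_site("VA-0001", ledger))
    print("ledger intact:", ledger.verify())
```

In this sketch the risk score never decides the outcome. It only orders the human review queue, which keeps expert judgment in the loop while still logging every automated step.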

Each new permit processed this way becomes training data for the next — improving precision and consistency over time.

The Future of Permitting Is Intelligent

Permitting delays are already slowing the clean-energy transition. They're now slowing the digital one too. If agentic AI is given the right tools, constraints, and goals, it can help speed up the process for every party involved.

Where would you start if you were building this prototype?